Next data leak and security incident at Microsoft: Security researchers at Wiz stumbled upon 38 terabytes of private internal data in an Azure storage account that was reachable via a public Microsoft AI GitHub repository. According to the researchers, access was enabled by a misconfigured SAS token. The exposed data also included personal computer backups of two Microsoft employees containing secret keys and credentials, as well as more than 30,000 internal Microsoft Teams messages.

If any proof was needed that IT security is slipping away from us, and from Microsoft's own employees, here it is. Two blog readers alerted me to this incident via a comment and on Twitter. Thanks for that.

Microsoft AI researchers cause data leak

A team of Wiz security researchers scans the Internet for cloud-hosted data that has mistakenly been left open to public access. As part of this work, they also looked at a public GitHub repository of Microsoft's AI research group. Interested developers are supposed to download training data from there. However, the URLs provided for these downloads allowed far more than that. The Wiz researchers write that when publishing open-source training data, Microsoft's AI research team accidentally left the door to the vault open.

The security researchers disclosed the incident responsibly and made it public in a blog post as well as in a series of tweets on X. Microsoft published a blog post of its own at the same time.

What happened?

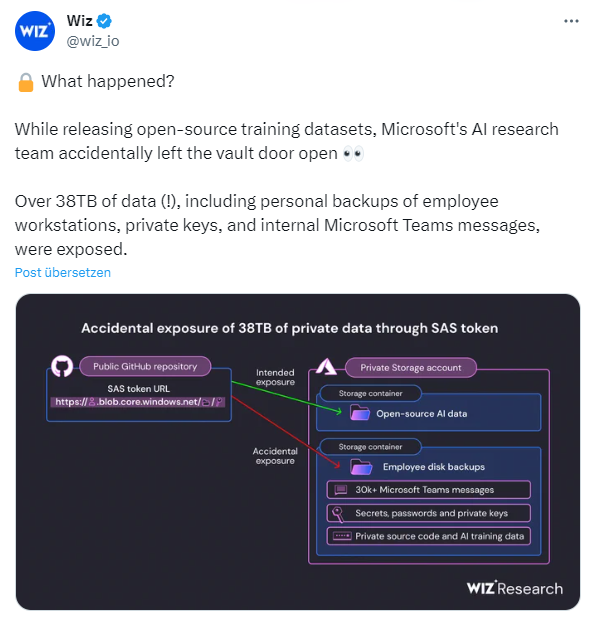

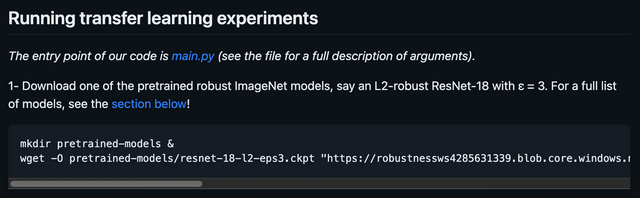

The Microsoft AI Research Group uses a GitHub repository to make open-source code and AI models for image recognition publicly available to interested developers. They could download the models from an Azure Storage instance via a URL provided in the repository (see image above).

That in itself is nothing unusual: Microsoft has several such public GitHub repositories, and they are actively used. On closer inspection, however, the security researchers found that this URL contained a misconfigured SAS token that granted third parties access to the entire storage account.

In Azure, a shared access signature (SAS) token is a signed URL that grants access to Azure Storage data, the Wiz researchers explain. The user creating the token can customize the level of access, with permissions ranging from read-only to full control. The scope can be a single file, a container, or an entire storage account. The expiry time is also fully customizable, so users can create tokens with effectively unlimited validity. This granularity gives users a great deal of flexibility, but it also carries the risk of granting too much access. In the most permissive case (as with Microsoft's token described above), the token grants full control over the entire account, forever. That is essentially the same level of access as the account key itself.
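Because a SAS token is just a set of query parameters appended to a storage URL, its scope, permissions, and expiry can be read straight from the URL. The following Python sketch audits such a URL using only the standard library. The parameter names (`sp` for permissions, `se` for expiry, `ss`/`srt` for an account SAS, `sr` for a service SAS) are the ones Azure uses; the function name `audit_sas_url`, the 30-day threshold, and the choice of which permission letters count as "write-like" are assumptions made for illustration. This is not a Microsoft or Wiz tool.

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs, urlparse

# Assumption: a subset of Azure SAS permission letters that allow changing or
# destroying data (w = write, d = delete, a = add, c = create, u = update).
WRITE_LIKE = set("wdacu")


def audit_sas_url(url, now=None, max_days=30):
    """Heuristic check of a SAS URL; returns a list of human-readable warnings."""
    now = now or datetime.now(timezone.utc)
    # parse_qs URL-decodes values, e.g. "%3A" in timestamps becomes ":".
    params = {key: values[0] for key, values in parse_qs(urlparse(url).query).items()}
    warnings = []

    # An account SAS carries "ss" (services) and "srt" (resource types);
    # a service SAS carries "sr" instead and is limited to one resource.
    if "ss" in params or "srt" in params:
        warnings.append("account-level SAS: scope is the whole storage account")

    granted = set(params.get("sp", "")) & WRITE_LIKE
    if granted:
        warnings.append("write-like permissions granted: " + "".join(sorted(granted)))

    expiry = params.get("se")
    if expiry is None:
        warnings.append("no expiry (se) in URL")
    else:
        # Accept both "...Z" timestamps and date-only values; assume UTC.
        exp = datetime.fromisoformat(expiry.replace("Z", "+00:00"))
        if exp.tzinfo is None:
            exp = exp.replace(tzinfo=timezone.utc)
        days_left = (exp - now).days
        if days_left > max_days:
            warnings.append(f"expires in {days_left} days (more than {max_days})")
    return warnings


if __name__ == "__main__":
    # Hypothetical URL shaped like a broad account SAS (not the real token).
    example = ("https://exampleaccount.blob.core.windows.net/?sv=2020-08-04&ss=b&srt=sco"
               "&sp=racwdl&se=2051-10-06T04:00:00Z&sig=REDACTED")
    for warning in audit_sas_url(example, now=datetime(2023, 6, 22, tzinfo=timezone.utc)):
        print(warning)
```

Such a check only helps if you know the URL exists in the first place; as Wiz points out, Azure itself offers no official inventory of issued SAS tokens.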

According to the Wiz researchers, the SAS token in the URL to the Azure storage account not only allowed access to data that was meant to be private, but also granted full control. An attacker could have not only viewed all files in the storage account, but also deleted and overwritten existing ones. According to the researchers, an attacker could have injected malicious code into all the AI models in that storage account, and every user who trusted Microsoft's GitHub repository would have been infected. The perfect supply chain attack on Microsoft's AI training material and stored files.

A 38 TByte data leak

For the security researchers, however, the more interesting question at first was what could be found in the unintentionally accessible storage. The Wiz team's scan of this storage account revealed that it had inadvertently exposed 38 TB of additional private data.

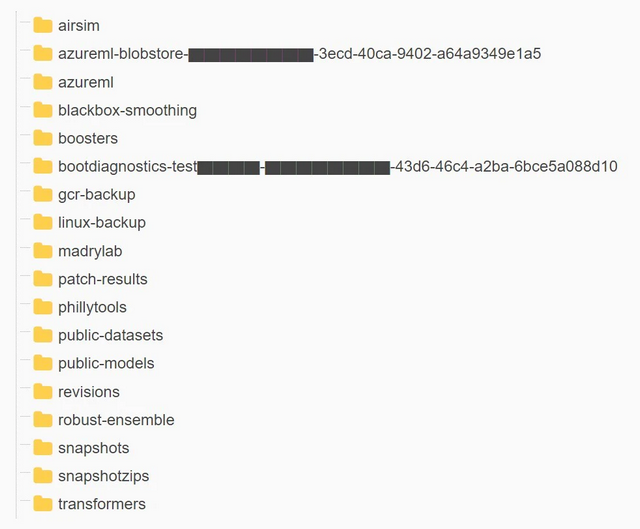

The tweet above and the diagram embedded in it illustrate the issue; the image below shows the directory structure of the part of the Azure Storage instance that should have been private.

The directories accessible via the GitHub repository contained personal computer backups of two Microsoft employees. These backups contained sensitive personal data, including passwords to Microsoft services, secret keys, and more than 30,000 internal Microsoft Teams messages from 359 Microsoft employees.

Once again, this hit the jackpot: the crown jewels from the Microsoft vault, somewhat similar to what I described in the blog post Microsoft's Storm-0558 cloud hack: MSA key comes from Windows crash dump of a PC.

The Wiz researchers go into much more detail in their blog post and explain the risks of using SAS tokens. The tokens are quick to set up, but account SAS tokens are extremely difficult to manage and revoke. According to Wiz, there is no official way to track these tokens within Azure or to monitor their issuance. SAS tokens are created on the client side and are not Azure objects. As a result, even a storage account that appears private can potentially be widely exposed. A perfect recipe for chaos and for incidents like this one.
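Why Azure cannot list issued tokens becomes clearer when you look at how an account SAS is produced: whoever holds the account key computes an HMAC-SHA256 signature locally and appends it to the URL as `sig`, without any call to Azure. The Python sketch below illustrates this. The general mechanism (HMAC-SHA256 over a string-to-sign, keyed with the Base64-decoded account key, with a Base64-encoded result) is how Azure signs SAS tokens, but the string-to-sign here is deliberately simplified. The real field order and field set depend on the storage service version, so treat the hypothetical `sign_sas` function and its field layout as illustrative assumptions, not a replacement for the Azure SDK.

```python
import base64
import hashlib
import hmac


def sign_sas(account_name, account_key_b64, signed_fields):
    """Compute a SAS-style signature entirely on the client (simplified sketch).

    Assumption: the string-to-sign is the account name followed by the signed
    fields, each terminated by a newline. Azure's real layout is versioned.
    """
    string_to_sign = account_name + "\n" + "".join(f + "\n" for f in signed_fields)
    key = base64.b64decode(account_key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


if __name__ == "__main__":
    # Hypothetical key and fields (permissions, services, resource types, start,
    # expiry, IP, protocol, version); nothing here contacts Azure.
    demo_key = base64.b64encode(b"demo-account-key-not-real").decode("ascii")
    fields = ["racwdl", "b", "sco", "", "2051-10-06T04:00:00Z", "", "https", "2020-08-04"]
    print(sign_sas("exampleaccount", demo_key, fields))
```

The service only recomputes and compares the signature when a request arrives, so it has no record of a token until the token is used. For an account SAS signed with the account key, the practical way to revoke it is to regenerate that key, which also invalidates every other token signed with it.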

Interesting timeline of the incident

The SAS token was first posted on GitHub on July 20, 2020, with an expiration date of October 5, 2021. On October 6, 2021, the token was renewed with a new expiration date of October 6, 2051. I have no idea whether anything will still "hurt" today's protagonists by then, or whether Microsoft will even still exist.

The Wiz security researchers discovered the problem on June 22, 2023 and reported it to the MSRC. By then, the Azure storage instance had probably been open to unauthorized parties for well over two years, since the token was first published in July 2020. Things then moved very quickly: just two days later, on June 24, 2023, Microsoft invalidated the SAS token. On July 7, 2023, the SAS token was replaced on GitHub. In the background, Microsoft conducted an internal investigation into possible impacts, which was completed on August 16, 2023. On September 18, 2023, Wiz and Microsoft disclosed the incident publicly at the same time.
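The dates in this timeline can be checked with simple date arithmetic. The Python sketch below assumes, as a simplification, that the token granted access continuously from its first publication on GitHub until Microsoft invalidated it, ignoring the one-day gap between the old expiry and the renewal.

```python
from datetime import date

# Dates from the Wiz/Microsoft timeline
first_published = date(2020, 7, 20)  # SAS token first posted on GitHub
renewed = date(2021, 10, 6)          # token renewed ...
new_expiry = date(2051, 10, 6)       # ... with this new expiration date
reported = date(2023, 6, 22)         # Wiz reports the issue to MSRC
revoked = date(2023, 6, 24)          # Microsoft invalidates the token

exposure_days = (revoked - first_published).days
lifetime_days = (new_expiry - renewed).days
response_days = (revoked - reported).days

print(f"exposure window: {exposure_days} days (~{exposure_days / 365.25:.1f} years)")
print(f"validity of renewed token: {lifetime_days} days (~{lifetime_days / 365.25:.0f} years)")
print(f"report to revocation: {response_days} days")
```

Under these assumptions, the exposure window comes to 1,069 days (about 2.9 years), and the renewed token would have stayed valid for 10,957 days, i.e. 30 years.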

How Microsoft sees the incident

Microsoft has disclosed the security incident in the blog post Microsoft mitigated exposure of internal information in a storage account due to overly-permissive SAS token. In it, Microsoft states that it mitigated the exposure of internal information in a storage account that resulted from a SAS token granting overly broad access rights.

According to the post, Microsoft investigated and remediated the incident, which involved a Microsoft employee. The post confirms Wiz's account described above and acknowledges that the data exposed in this misconfigured storage account included backups of two former employees' workstation profiles, as well as internal Microsoft Teams messages these two employees had exchanged with their colleagues.

Microsoft emphasizes that no customer data was exposed and that no other internal services were compromised by this issue. No customer action is required. Or, as my blog readers have put it in similar incidents: "You got off lightly." At least Microsoft says it shares lessons learned and best practices in the post to inform customers and help them avoid similar incidents in the future. These explanations and "good advice from Microsoft on how to avoid something like this" can be read in the linked post.

Similar articles:

China hacker (Storm-0558) accessed Outlook accounts in Microsoft's cloud

Follow-up to the Storm-0558 cloud hack: Microsoft is still in the dark

Stolen AAD key allowed (Storm-0558) wide-ranging access to Microsoft cloud services

Microsoft as a Security Risk? U.S. senator calls for Microsoft to be held accountable over Azure cloud hack – Part 1

Microsoft as a Security Risk? Azure vulnerability unpatched since March 2023, heavy criticism from Tenable – Part 2

Microsoft's Storm-0558 cloud hack: MSA key comes from Windows crash dump of a PC