[German]On May 20, 2024, Microsoft presented its "Copilot+PC" (hardware with AI support and co-pilot). In my article Microsoft's AI PC with Copilot – some thoughts – Part 1 I presented my doubts, that there such machines are required. But there is more: Microsoft's "Copilot+PC" with the "Recall" function, which is able to take screenshots and analyze everything, is presenting a privacy and security night mare.

[German]On May 20, 2024, Microsoft presented its "Copilot+PC" (hardware with AI support and co-pilot). In my article Microsoft's AI PC with Copilot – some thoughts – Part 1 I presented my doubts, that there such machines are required. But there is more: Microsoft's "Copilot+PC" with the "Recall" function, which is able to take screenshots and analyze everything, is presenting a privacy and security night mare.

Will Recall become a security disaster?

Satya Nadella, Microsoft's CEO, said during an interview where he presented the AI PCs and was asked about people's concerns regarding this recall function: "You have to bring two things together. This is my computer, this is my recall, and it's all done locally. So that's the promise. That's one of the reasons why Recall works like magic, because I can trust that it's on my computer."

Users should be able to prevent the Recall function from taking screenshots if they are concerned about their privacy or do not want to use the function. In this German article, Martin Geuß has linked to the technical documentation for Recall, in which Microsoft talks a lot about "privacy". Martin writes that Recall takes and saves a screenshot every five seconds. And after the initial setup of Windows, Recall is switched on by default.

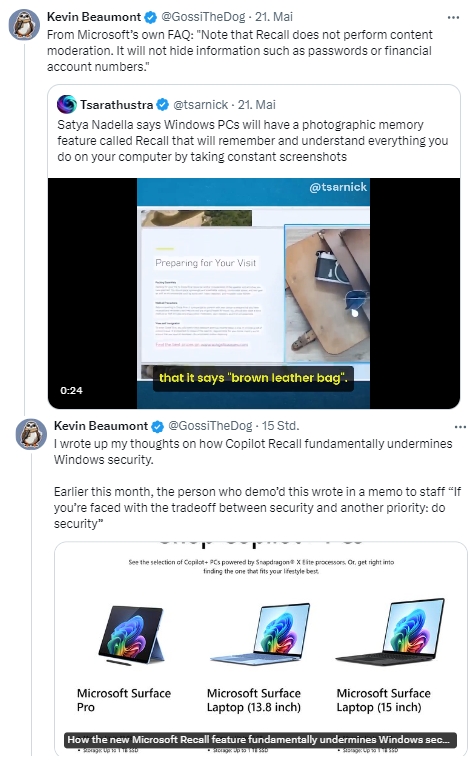

vx-underground, who deal with ransomware and security, put it in a nutshell in the above tweet: "Microsoft is introducing a 24/7 surveillance feature for the NSA and/or CIA, but marketing it as a feature that people will like". Nadella's interview is linked in the tweet. And security researcher Kevin Beaumont rips the concept of Recall as the dumbest thing ever in a series of tweets.

Currently, InfoStealer malware would store users' saved passwords. In the future, CoPilot Recall would be the "malware" that steals everything the user has ever typed or viewed on the computer and then stores it in an already created database. It is enough to simply pull the Recall database instead of just the local browser password database to access the crown jewels. Financial data, chats etc are out in the open and are very valuable.

Beaumont published this post on Double-Pulsar showing how Microsoft is undermining Windows security with Recall. In the above tweet, Beaumont alludes to Microsoft's promise to take security seriously in the future with the sentence "Earlier this month, the person who demo'd this wrote in a memo to staff "If you're faced with the tradeoff between security and another priority: do security"" (see Microsoft declared security as a top priority).

As Kevin Beaumont says: "Thank you, Microsoft, for paving the way for malicious hackers" and states on X that a simple PowerShell command is enough to turn Recall back on. And in this tweet, Beaumont writes that Copilot+Recall is now globally enabled in Microsoft Intune when using a Copilot+-compatible device. Users must deactivate the function manually in the user settings (see also).

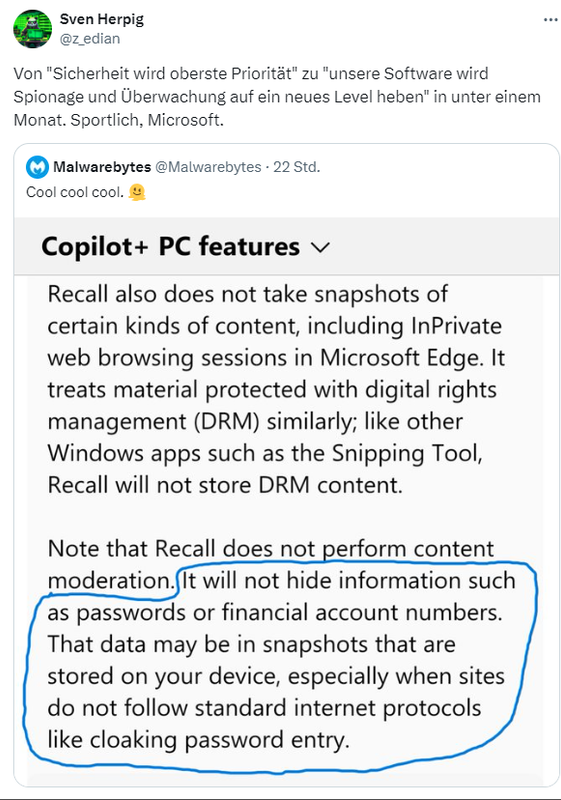

Malwarebytes also pointed out the dangers of Recall in a tweet, as everything is recorded. Mitja Kolsek from ACROS Security writes in a tweet "Something tells me @0patch will have [something for] many customers who want to stay with Windows 10 forever." ACROS Security offers micropatching for Windows so that the Windows 10 operating system can be operated securely after the official end of support for Windows 10.

Elon Musk had referred to Linux (see part 1). In this tweet, someone says that "Microsoft's latest dodge is a good reason to write off Windows. Switch to macOS (unless Apple is also planning this madness) or Linux," it also says. Microsoft has lost its bearings and no longer cares about the security or privacy of its users.

EU takes a closer look at Microsoft Bing / ChatGPT

DThe EU Commission has submitted a legally binding request for information to Microsoft against its search engine Bing. The request is for information regarding specific risks in CoPilot (based on ChatGPT) in relation to the Digital Service Act (DSA).

And Techcrunch points out here that Microsoft could face a fine of millions if it violates the DSA. The background to this is that the EU Commission had already requested information on systemic risks posed by generative AI tools from Microsoft and a number of other tech giants in March 2024. Last Friday (17.5.), the Commission stated that Microsoft had not provided some of the requested documents. However, in an updated version of the Commission's press release, the wording has been changed and the earlier claim that the EU had not received a response from Microsoft has been deleted. The revised version states that the EU is stepping up enforcement measures "following an initial request for information". The Commission has set Microsoft a deadline of May 27 to provide the requested data, it says. Something is still looming.

Co-pilot as a security / privacy risk

In this context, some information that a reader sent me by email at the end of April 2024 is also very revealing. The reader became aware of the "prompt injection" attack vector via a radio report.

The copilot running locally on your computer can be tricked by targeted prompt injection into disclosing/sending your data when you come across a prepared website. This malicious website is fed with instructions that are executed locally by the Copilot.

The reader has linked to the website "Who Am I? Conditional Prompt Injection Attacks with Microsoft Copilot" vrom 02.03.2024, which deals with the topic. Microsoft is aware of the problems, as can be seen in the Techcommunity article "Azure AI announces Prompt Shields for Jailbreak and Indirect prompt injection attacks" from 28.03.2024 and the article "Microsoft doesn't want you to use prompt injection attacks to trick Copilot AI into spiraling out of control, and it now has the tool to prevent this" from 01.04.2024. In its FAQs, such as here, Microsoft waffles around for an answer.

Final thoughts

Looking back at the two articles (Part 1 and Part 2) in my AI series, I can't help but get the impression that all this AI stuff is being thrown at users half-finished and that everything is moving in the wrong direction. Privacy and security seem to be secondary issues. I found the topic of copilot+PC and recall interesting. Initial articles in the US media were uncritical hymns of praise. But since yesterday, there have been more and more critical voices saying that it doesn't work at all.

jackpot with this move – or whether there are enough lemmings who will jump on this development. In my view, the "GDPR battles" surrounding Windows telemetry or Microsoft Office 365 are a mere breeze against the storm that is brewing with the AI solutions now being presented. Nadella may have led Microsoft into its biggest crisis since the departure of Steve Ballmer. Security is on fire and Microsoft has its back to the wall. Instead of changing course, they are paying lip service and now they are adding fuel to the fire with the recall function. This can only go wrong – it would be nice if I were wrong.

Similar articles

Microsoft's AI PC with Copilot – some thoughts – Part 1

Microsofts Copilot+PC, a privacy and security nightmare – Part 2

Microsoft has not "lost it"

M$ never "had it" !