Useful examples of AI applications do exist. The Project Zero team has now explained how it turned a Large Language Model (LLM) loose on software for vulnerability analysis in its "Big Sleep" project (the successor to "Naptime"). The effort uncovered a previously unknown SQLite vulnerability before it made its way into an official release, which shows the potential of AI for vulnerability analysis during software development.

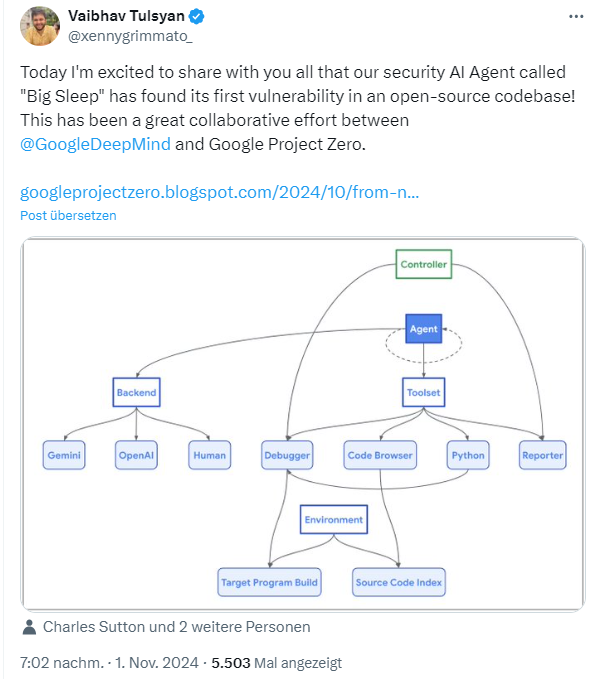

I came across the "Big Sleep" project yesterday via a tweet and the disclosure of the vulnerability in the article "From Naptime to Big Sleep: Using Large Language Models To Catch Vulnerabilities In Real-World Code".

Project Naptime

Google already had a project called Naptime to evaluate the security capabilities of large language models (see "Project Naptime: Evaluating Offensive Security Capabilities of Large Language Models"). That project developed a framework for LLM-assisted vulnerability research and tested it against Meta's CyberSecEval2 benchmarks. The results were used to improve the framework and the LLM so that they performed as well as possible in vulnerability analysis. Since then, Naptime has evolved into Big Sleep, according to the team. Big Sleep is a collaboration between Google Project Zero and Google DeepMind.

Big Sleep uncovers SQLite vulnerability

The Project Zero team is now celebrating the first success of AI-supported vulnerability analysis on real-world software that was still in development. Big Sleep was pointed at SQLite, the widely used open source database engine, to check it for vulnerabilities.

The first real vulnerability was discovered by the Big Sleep agent, which is based on Gemini 1.5 Pro. It is a stack buffer underflow in SQLite, that is, a memory access before the start of a buffer on the stack, and it could have been exploited. The vulnerability was reported to the developers at the beginning of October 2024, and they fixed it the same day. It was probably found in a beta version of SQLite and never made it into an official release, so SQLite users were not affected.

The attempt was inspired by Team Atlanta's discovery of a null pointer dereference in SQLite at the DARPA AIxCC event earlier in 2024. The Google team then examined SQLite with its own framework, and the AI found a vulnerability there as well.

The team believes this is the first publicized case in which an AI agent has found a previously unknown, exploitable memory-safety issue in widely used, real-world software. It sees great potential in having AI detect vulnerabilities before the software is even released, since that leaves attackers no opportunity to discover and exploit them in shipped products. Details can be found in the linked article by the Google team.