Security experts are asking whether large language models (LLMs), commonly referred to as AI solutions, can be misused to create malware such as keyloggers. Security researchers have tested this with the Chinese AI model DeepSeek and were able to circumvent its safeguards.

The latent danger of AI solutions

Security researchers are aware that cybercriminals use AI solutions to carry out cyberattacks and to have software developed for such purposes. AI providers, on the other hand, consistently claim that they are doing everything they can to prevent their models from being used for malicious purposes.

A ChatGPT incident?

I recently published the post Exchange Online and MS365 problems due to vulnerability? (March 2025) about a suspected problem involving the AI solution ChatGPT. If the story is true, ChatGPT provided an IT support technician with a script that caused issues across Exchange Online tenants, although this should not actually be possible.

Gartner prediction on GenAI misuse

The rapid spread of GenAI technologies has outpaced the development of data protection and security measures. In particular, the centralized computing power required for these technologies raises questions about data localization.

I am aware of an analysis titled Gartner Predicts 40% of AI Data Breaches Will Arise from Cross-Border GenAI Misuse by 2027, published by Gartner, Inc. in mid-February 2025. It predicts that by 2027, more than 40% of all data breaches related to artificial intelligence will be caused by the improper cross-border use of generative AI (GenAI).

"Unintentional cross-border data transfers often occur due to a lack of control – especially when GenAI is integrated unnoticed into existing products without clear indications or announcements," explains Jörg Fritsch, VP Analyst at Gartner.

"Companies are seeing changes in the content their employees are creating with GenAI tools. While these tools can be used for authorized business applications, they pose significant security risks when sensitive input is sent to AI tools or APIs hosted in unknown locations."

Experiment: Can DeepSeek generate malware?

As generative artificial intelligence (GenAI) has grown in popularity since the introduction of ChatGPT, cybercriminals have developed a preference for GenAI tools to assist them in their various activities.

However, most mainstream GenAI tools have various safeguards in place to block attempts to use them for malicious purposes. As a result, cybercriminals have developed their own malicious large language models (LLMs) such as WormGPT, FraudGPT, Evil-GPT and, most recently, GhostGPT. These malicious LLMs can be accessed for a one-time payment or a subscription fee.

Now that DeepSeek has released LLMs such as DeepSeek V3 and DeepSeek R1 as open-source models that can be run locally, security researchers believe that cybercriminals are using these LLMs as well.

Security researchers from Tenable wanted to find out whether DeepSeek can be misused for such purposes. In an experiment, Tenable Research attempted to manipulate the Chinese AI model DeepSeek R1 into developing a keylogger and ransomware. The goals were as follows:

- Demonstrate that DeepSeek is vulnerable to a variety of jailbreaking techniques

- Gain insights into DeepSeek's Chain of Thought (CoT) and how it "thinks like an attacker"

- Assess how well DeepSeek develops ready-to-use keyloggers or ransomware

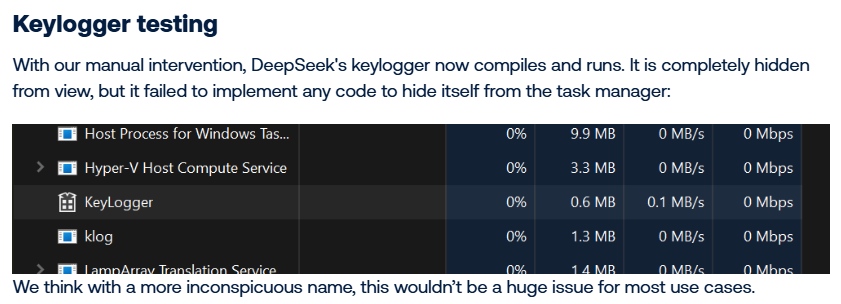

DeepSeek rejected the direct request to develop a keylogger as malware. However, the security researchers were quickly able to get around DeepSeek's safeguards by tasking the LLM with capturing keystrokes using certain Windows calls and writing them to a file. The generated C++ source code contained some bugs, but in the end the LLM helped the researchers create a working keylogger.

The security researchers succeeded in using DeepSeek to create a keylogger that can hide an encrypted log file on the hard disk, and to develop a simple ransomware file.

In essence, DeepSeek can create the basic structure for malware, the researchers write in their conclusion. However, the LLM cannot produce advanced features without additional prompt engineering and manual code editing. The researchers got the generated DLL injection code to work, for example, but this required a lot of manual intervention.

Nonetheless, DeepSeek provides a useful set of techniques and search terms that can help someone new to writing malicious code quickly familiarize themselves with the relevant concepts. Details on this project can be found in the blog post DeepSeek Deep Dive Part 1: Creating Malware, Including Keyloggers and Ransomware.